In the ever-evolving world of web development and SEO, robots.txt plays a vital role in determining which pages on your website search engines should or should not crawl. This small yet essential text file helps search engine bots, like Googlebot, navigate your site more efficiently by telling them which URLs they may crawl.

The robots.txt file serves as the gatekeeper for search engine crawlers. It steers them toward your valuable resources and away from low-value or duplicate content. Keep in mind that it controls crawling, not indexing: a URL blocked in robots.txt can still appear in search results if other pages link to it. If you manage a website, understanding how to create and configure your robots.txt file is crucial for optimizing your site’s SEO and performance.

In this guide, we will dive deep into everything you need to know about robots.txt: its syntax, directives, best practices, common errors, testing tools, and more.

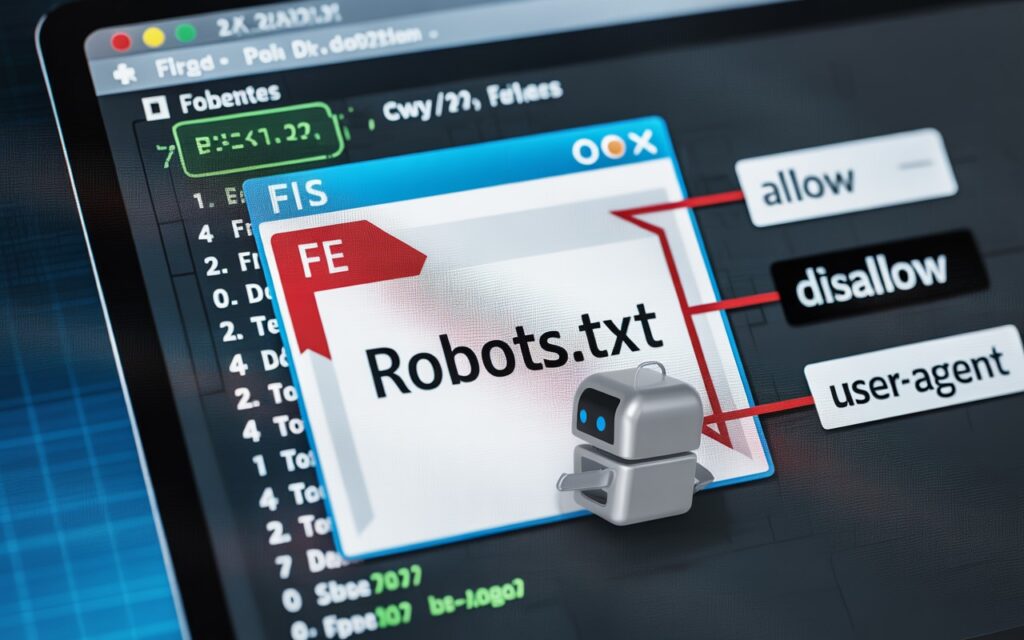

What is Robots.txt?

The robots.txt file is a text file that is placed in the root directory of a website. It is used to instruct web robots or crawlers, such as those used by search engines like Google, Bing, and Yahoo, about which pages they are allowed or disallowed to crawl.

The primary purpose of robots.txt is to manage crawler traffic. It prevents the server from being overloaded with unnecessary requests by limiting which pages the bots can access.

Example Robots.txt File

User-agent: *
Disallow: /private/
Allow: /public/
Sitemap: http://example.com/sitemap.xml

In this example, all crawlers (User-agent: *) are instructed not to crawl any pages within the /private/ directory, while the /public/ directory remains open to them. Strictly speaking, the Allow line is redundant here, because anything not disallowed may be crawled by default. The Sitemap directive tells crawlers where to find your sitemap.
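To check the example above without deploying anything, you can feed it to Python’s standard-library parser, urllib.robotparser. This is only a sketch: that module implements the basic exclusion rules (plain prefix matching, first matching rule wins) rather than Google’s exact logic, so treat its answers as an approximation of what Googlebot will do.

```python
from urllib.robotparser import RobotFileParser

# The example file from above. Keep a group's lines contiguous:
# urllib.robotparser treats a blank line as the end of a group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /public/
Sitemap: http://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in (
    "http://example.com/private/report.html",
    "http://example.com/public/index.html",
    "http://example.com/about.html",
):
    # can_fetch(useragent, url) is True when the rules permit crawling.
    print(url, parser.can_fetch("Googlebot", url))

# Sitemap URLs collected from the file (site_maps() needs Python 3.8+).
print(parser.site_maps())
```

site_maps() returns None when the file lists no Sitemap line.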

Understanding the structure and syntax of the robots.txt file is key to its proper configuration. Here’s a breakdown of some essential components:

Key Directives in Robots.txt

User-agent: Specifies which web crawlers a group of rules applies to. For example, User-agent: Googlebot targets only Google’s crawler, while User-agent: * applies to all crawlers.

Disallow: Tells web crawlers not to visit a certain directory or page. For example, Disallow: /private/ blocks crawlers from accessing the /private/ folder.

Allow: The counterpart of Disallow; it lets crawlers visit a specific page or directory inside an otherwise disallowed path. When Allow and Disallow rules conflict, Google applies the most specific (longest) matching rule.

Sitemap: Indicates the full URL of your sitemap file, which helps crawlers discover your pages. It applies to the whole file rather than to a single user-agent group.
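To see how these directives fit together, here is a minimal Python sketch of a parser. It groups rules under the User-agent lines above them and collects Sitemap lines separately, because Sitemap does not belong to any group. The function name parse_groups is hypothetical. The sketch also skips validation, wildcards, and other details that a production parser handles.

```python
from typing import Dict, List, Tuple


def parse_groups(text: str) -> Tuple[List[Dict[str, list]], List[str]]:
    """Split robots.txt text into user-agent groups plus global sitemaps.

    Simplified sketch: ignores comments and unknown fields, no validation.
    Consecutive User-agent lines share one group.
    """
    groups: List[Dict[str, list]] = []
    sitemaps: List[str] = []
    current = None
    last_field = None
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue  # blank or malformed line
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()  # field names are case-insensitive
        if field == "sitemap":
            sitemaps.append(value)  # not tied to any group
            continue
        if field == "user-agent":
            # A User-agent line that follows rules starts a new group.
            if current is None or last_field != "user-agent":
                current = {"agents": [], "rules": []}
                groups.append(current)
            current["agents"].append(value)
        elif field in ("allow", "disallow") and current is not None:
            current["rules"].append((field, value))
        last_field = field
    return groups, sitemaps


groups, sitemaps = parse_groups(
    "User-agent: *\nDisallow: /private/\nAllow: /public/\n"
    "Sitemap: http://example.com/sitemap.xml\n"
)
print(groups)
print(sitemaps)
```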

Here’s an example of a more detailed robots.txt file format:

User-agent: *
Disallow: /private/
Allow: /public/
Disallow: /admin/
Sitemap: http://example.com/sitemap.xml

This file instructs all bots to avoid the /private/ and /admin/ directories, while allowing access to the /public/ directory.

Disallow

The Disallow directive is one of the most commonly used commands in robots.txt. It tells crawlers not to request certain pages or directories.

For example:

User-agent: *
Disallow: /admin/

This blocks all crawlers from accessing the /admin/ directory.

Allow

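The Allow directive overrides a broader Disallow directive. It is helpful when you want to block a whole directory but still allow specific files inside it. Google resolves the conflict by applying the most specific rule, meaning the one with the longest matching path.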

For example:

User-agent: *
Disallow: /private/
Allow: /private/public-page.html

This prevents crawlers from accessing any page within the /private/ folder, except /private/public-page.html, which remains crawlable.
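Google documents how it resolves such conflicts. The rule with the longest matching path wins, and when an Allow rule and a Disallow rule match with equal length, the less restrictive Allow wins. The hypothetical Python helper below sketches that resolution for plain path prefixes. It ignores the * and $ wildcards that Google also supports. Not every parser works this way. Python’s urllib.robotparser, for example, applies the first matching rule in file order, so putting the Allow line before the Disallow line keeps the file unambiguous across parsers.

```python
from typing import List, Tuple


def is_allowed(path: str, rules: List[Tuple[str, str]]) -> bool:
    """Longest-match resolution of Allow/Disallow rules (sketch).

    The matching rule with the longest path wins; on a tie, Allow wins.
    Plain prefix matching only: no * or $ wildcard support.
    """
    best_len = -1
    allowed = True  # no matching rule means the path may be crawled
    for directive, rule_path in rules:
        if not rule_path or not path.startswith(rule_path):
            continue  # an empty rule value matches nothing
        is_allow = directive.lower() == "allow"
        if len(rule_path) > best_len or (len(rule_path) == best_len and is_allow):
            best_len = len(rule_path)
            allowed = is_allow
    return allowed


rules = [("Disallow", "/private/"), ("Allow", "/private/public-page.html")]
print(is_allowed("/private/public-page.html", rules))
print(is_allowed("/private/other.html", rules))
```

Because only rule length matters here, reversing the order of the two rules gives the same answers.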

Noindex

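Noindex is not a robots.txt directive. Google stopped honoring noindex rules in robots.txt in 2019. To keep a page out of search results, add a robots meta tag (name="robots", content="noindex") to the page’s HTML head, or send an X-Robots-Tag: noindex HTTP response header. Do not block that same page in robots.txt, because crawlers must be able to fetch the page to see the noindex instruction.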
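The noindex instruction lives on the page itself, either as a robots meta tag in the HTML head or as an X-Robots-Tag HTTP response header. The Python sketch below checks a page’s HTML and response headers for either form. This can help confirm that a page you want removed from search results actually carries the instruction. RobotsMetaFinder and has_noindex are hypothetical names, and the sketch uses a simple substring check rather than parsing every directive format.

```python
from html.parser import HTMLParser
from typing import List, Mapping, Optional, Tuple


class RobotsMetaFinder(HTMLParser):
    """Collects the content of every <meta name="robots"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: List[str] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        # HTMLParser lowercases tag and attribute names, but not values.
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            self.directives.append((attributes.get("content") or "").lower())


def has_noindex(html: str, headers: Mapping[str, str]) -> bool:
    """Return True if the page opts out of indexing via meta tag or header."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    values = finder.directives + [
        value.lower() for name, value in headers.items() if name.lower() == "x-robots-tag"
    ]
    # Simplified: a substring check also accepts forms like "googlebot: noindex".
    return any("noindex" in value for value in values)


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page, {}))
print(has_noindex("<p>Hello</p>", {"X-Robots-Tag": "noindex"}))
```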

Using Robots.txt to Block Specific Bots and User Agents

Blocking Search Engine Bots

You can block specific bots by using their user-agent string. Here’s an example of blocking Googlebot:

User-agent: Googlebot
Disallow: /

This directive tells Googlebot to avoid crawling the entire site. Similarly, you can block other search engines’ crawlers by naming their user-agent tokens, such as Bingbot for Bing.
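Each group applies only to the user agents it names, so a group for Googlebot leaves every other crawler unaffected. As a sketch, Python’s urllib.robotparser can confirm this for the example above. Bingbot is used here only as an example of a crawler that the file does not mention.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot matches the group, so every path is blocked for it.
print(parser.can_fetch("Googlebot", "https://example.com/"))
# Bingbot matches no group, so nothing in this file restricts it.
print(parser.can_fetch("Bingbot", "https://example.com/"))
```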

Blocking Crawlers from Specific Content

Robots.txt is useful for blocking sensitive areas of your site, like the admin panel or user data. For example:

User-agent: *
Disallow: /admin/
Disallow: /user-data/

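This asks crawlers not to visit these private areas. It is not a security measure, though. Robots.txt is publicly readable, so anyone can see every path you list there, and badly behaved bots simply ignore the file. Protect genuinely sensitive content with authentication instead.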

Robots.txt Best Practices

To ensure your robots.txt file works effectively for your site, here are some best practices:

Avoid Blocking Important Pages: Be cautious not to block important pages from being crawled. For example, blocking product pages or blog posts could hurt your SEO rankings.

Use Disallow Sparingly: Only disallow pages that are redundant or irrelevant for SEO purposes, such as admin panels. Every unnecessary Disallow rule risks keeping search engines away from valuable content.

Keep Robots.txt Simple: Maintain a clean and organized robots.txt file to avoid confusion and errors. Use clear directives and avoid unnecessary complexity.

Robots.txt Testing and Validation

After creating or updating your robots.txt file, it’s essential to test it to ensure it’s working as expected. Several tools are available to help you verify the contents and syntax of your file.

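Checking Robots.txt in Google Search Console

Google Search Console once offered a standalone robots.txt Tester, which Google retired in late 2023. Its replacement, the robots.txt report, shows which robots.txt files Google found for your site, when they were last crawled, and any warnings or errors Google encountered while parsing them. To check whether a specific URL is blocked, use the URL Inspection tool.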
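You can also check a draft file locally before uploading it. The sketch below uses Python’s urllib.robotparser to list which must-crawl URLs a draft would block. The blocked_urls function, the draft rules, and the URL list are all hypothetical. The module does plain prefix matching without Google’s * and $ wildcards and applies the first matching rule, so treat a clean result as a sanity check rather than a guarantee of how Googlebot will behave.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, user_agent: str, urls: Iterable[str]) -> List[str]:
    """Return the URLs that robots_txt would stop user_agent from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical draft file and list of pages that must stay crawlable.
DRAFT = """\
User-agent: *
Disallow: /admin/
Disallow: /blog
"""
MUST_CRAWL = [
    "https://example.com/blog/robots-guide",
    "https://example.com/products/widget",
]

problems = blocked_urls(DRAFT, "Googlebot", MUST_CRAWL)
if problems:
    print("Blocked by the draft robots.txt:", problems)
```

In this draft, Disallow: /blog also catches /blog/robots-guide because rules match by prefix. This is the kind of accidental block the check is meant to surface.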

Common Errors and How to Fix Them

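Syntax Errors: Make sure each rule sits on its own line in the form field: value (for example, Disallow: /private/), and check spacing, punctuation, and line breaks carefully. Paths are case-sensitive, so /Private/ and /private/ are different rules.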

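Incorrect User-Agent Entries: Make sure each User-agent line names the crawler you want to target by its token, such as Googlebot, rather than its full user-agent string. A typo means the group silently applies to no crawler at all. Also note that a crawler obeys only the most specific group that matches it. Once you add a Googlebot group, Googlebot ignores the rules under User-agent: *.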
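The group-selection rule surprises many site owners. A crawler obeys only the group that matches it most specifically, so once a Googlebot group exists, Googlebot ignores everything under User-agent: *. Python’s urllib.robotparser behaves the same way in this simple case, which makes it handy for a quick sketch:

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /drafts/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot follows only its own group, so /private/ is NOT blocked for it.
print(parser.can_fetch("Googlebot", "https://example.com/private/page.html"))
print(parser.can_fetch("Googlebot", "https://example.com/drafts/page.html"))
# Crawlers without their own group fall back to the * group.
print(parser.can_fetch("Bingbot", "https://example.com/private/page.html"))
```

If Googlebot should also stay out of /private/, repeat that Disallow line inside the Googlebot group.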

How to Create and Generate Robots.txt

Creating and managing a robots.txt file can be done manually, or you can use various tools available online. If you’re not familiar with coding or want an easy approach, consider using a robots.txt generator.

Manual Creation of Robots.txt

To manually create a robots.txt file:

Open a text editor (like Notepad).

Type your desired rules using the correct syntax.

Save the file as robots.txt and upload it to your website’s root directory.
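Before uploading a hand-written file, a quick automated check can catch the most common typos. The following Python function, lint_robots_txt, is a hypothetical and deliberately small sketch, not a complete validator. It flags lines without a colon, unknown field names, Allow or Disallow rules that appear before any User-agent line, paths that do not start with / or *, and Sitemap values that are not absolute URLs.

```python
from typing import List

# Fields this sketch accepts; Crawl-delay is widely recognized, though Google ignores it.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots_txt(text: str) -> List[str]:
    """Return human-readable warnings for common robots.txt mistakes."""
    warnings = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments
        if not line:
            continue
        if ":" not in line:
            warnings.append(f"line {number}: missing ':' in {raw!r}")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_FIELDS:
            warnings.append(f"line {number}: unknown field {field!r}")
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                warnings.append(f"line {number}: {field} rule before any user-agent")
            if value and not value.startswith(("/", "*")):
                warnings.append(f"line {number}: path should start with '/' or '*'")
        elif field == "sitemap" and not value.startswith(("http://", "https://")):
            warnings.append(f"line {number}: sitemap should be an absolute URL")
    return warnings


sample = "User-agent *\nDisallow: private/\n"
for warning in lint_robots_txt(sample):
    print(warning)
```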

Using a Robots.txt Generator

For those who prefer not to write robots.txt manually, you can use a robots.txt generator. This tool simplifies the process by automatically creating the file based on the options you select.

Here’s how the process works:

Go to the Robots.txt Generator page.

Choose the rules you want to apply.

Download and upload the generated robots.txt file to your site.
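Under the hood, a generator mostly renders your choices in the correct syntax. The hypothetical Python function build_robots_txt below turns a mapping of user agents to rules into file text. It is an illustration only, not the code behind any particular generator tool.

```python
from typing import Dict, List, Optional, Sequence, Tuple


def build_robots_txt(
    groups: Dict[str, List[Tuple[str, str]]],
    sitemaps: Optional[Sequence[str]] = None,
) -> str:
    """Render {user_agent: [(directive, path), ...]} as robots.txt text.

    Each group is written as contiguous lines, groups are separated by a
    blank line, and Sitemap lines go last because they apply globally.
    """
    blocks = []
    for user_agent, rules in groups.items():
        lines = [f"User-agent: {user_agent}"]
        lines += [f"{directive}: {path}" for directive, path in rules]
        blocks.append("\n".join(lines))
    if sitemaps:
        blocks.append("\n".join(f"Sitemap: {url}" for url in sitemaps))
    return "\n\n".join(blocks) + "\n"


print(build_robots_txt(
    {"*": [("Disallow", "/private/"), ("Disallow", "/admin/")]},
    sitemaps=["http://example.com/sitemap.xml"],
))
```

Writing each group as contiguous lines and separating groups with blank lines keeps the output readable by older parsers that treat a blank line as the end of a record.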

Robots.txt and SEO

A properly configured robots.txt file is crucial for SEO because it can influence how search engines crawl and index your site. Here’s how robots.txt impacts SEO:

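Blocking Low-Value Pages: Keep crawlers away from low-value pages such as login pages, admin areas, or thank-you pages, so they do not waste crawl activity. Blocking a URL stops it from being crawled, but it does not guarantee that the URL stays out of search results. Use noindex for that.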

Improved Crawl Efficiency: By blocking crawlers from unnecessary resources, you ensure that search engines spend more time crawling the important content on your site.

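Preventing Duplicate Content Issues: If your site has multiple URLs with the same content, a rel="canonical" link is usually a better tool than robots.txt. When crawlers cannot fetch the duplicate URLs, they cannot see that those pages duplicate the canonical one. Google generally does not penalize duplicate content; it chooses one version to show in results.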

Advanced Robots.txt Use Cases

Blocking Specific Bots

If you want to block a particular bot, you can target its user-agent specifically. For example:

User-agent: BadBot
Disallow: /

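This asks BadBot not to crawl your site, while other bots can still access it. Keep in mind that compliance is voluntary. A bot that ignores robots.txt has to be blocked at the server level instead.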

Robots.txt with WordPress

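WordPress automatically serves a virtual robots.txt file when no physical file exists, but its default rules may not be fully optimized for your site. You can customize it by creating a physical robots.txt file in the root directory of your WordPress installation, which is then served instead of the virtual one.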

Conclusion

Your robots.txt file is an essential tool in SEO, helping you control how search engine crawlers interact with your site. By following best practices, testing your file, and using online tools like the Robots.txt Tester and Robots.txt Generator, you can ensure your robots.txt file is configured correctly and optimally for search engines.

Regularly review and update your robots.txt file to make sure it aligns with your SEO strategy and keeps your website running smoothly. Proper use of robots.txt leads to more efficient crawling and helps search engines focus on your most important pages. Ultimately, it supports a more effective website.

Frequently Asked Questions about Robots.txt

What is a robots.txt file?

A robots.txt file is a simple text file placed at the root of your website that tells search engine bots which pages or sections they are allowed or not allowed to crawl.

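Why is robots.txt important?

Robots.txt helps you control which parts of your site search engines can crawl. It reduces crawl waste and keeps bots out of private or duplicate sections. On its own, it does not reliably keep pages out of the index; use noindex for that.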

How do I create a robots.txt file?

You can create a robots.txt file using any plain text editor. Save the file as robots.txt and upload it to the root directory of your website (e.g., www.example.com/robots.txt).

How can I test my robots.txt file?

You can test your robots.txt file with Google Search Console (the robots.txt report and the URL Inspection tool) or with third-party tools like the one on this page.

Can I submit my robots.txt file to Google?

Yes. Google checks your robots.txt file automatically, but after updating it you can review it and request a recrawl through the robots.txt report in Google Search Console.

How do I edit robots.txt in WordPress?

If your WordPress theme or SEO plugin doesn’t allow direct editing, you can modify the file via FTP or a file manager in your hosting panel.

How do search engines use robots.txt?

When a compliant search engine bot visits your site, it first checks the robots.txt file to see what it’s allowed to crawl. If certain pages are disallowed, the bot skips them.

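What does “Indexed, though blocked by robots.txt” mean?

This means Google indexed a URL even though robots.txt blocked it from being crawled, usually because other pages link to it. To keep the page out of the index, remove the robots.txt block and add a noindex meta tag, so that Googlebot can crawl the page and see the tag.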